By an engineer with an aerospace and control-systems background, now applying those principles to LLM architectures.

The limitation in today's LLM systems is not model capacity.

It is architecture.

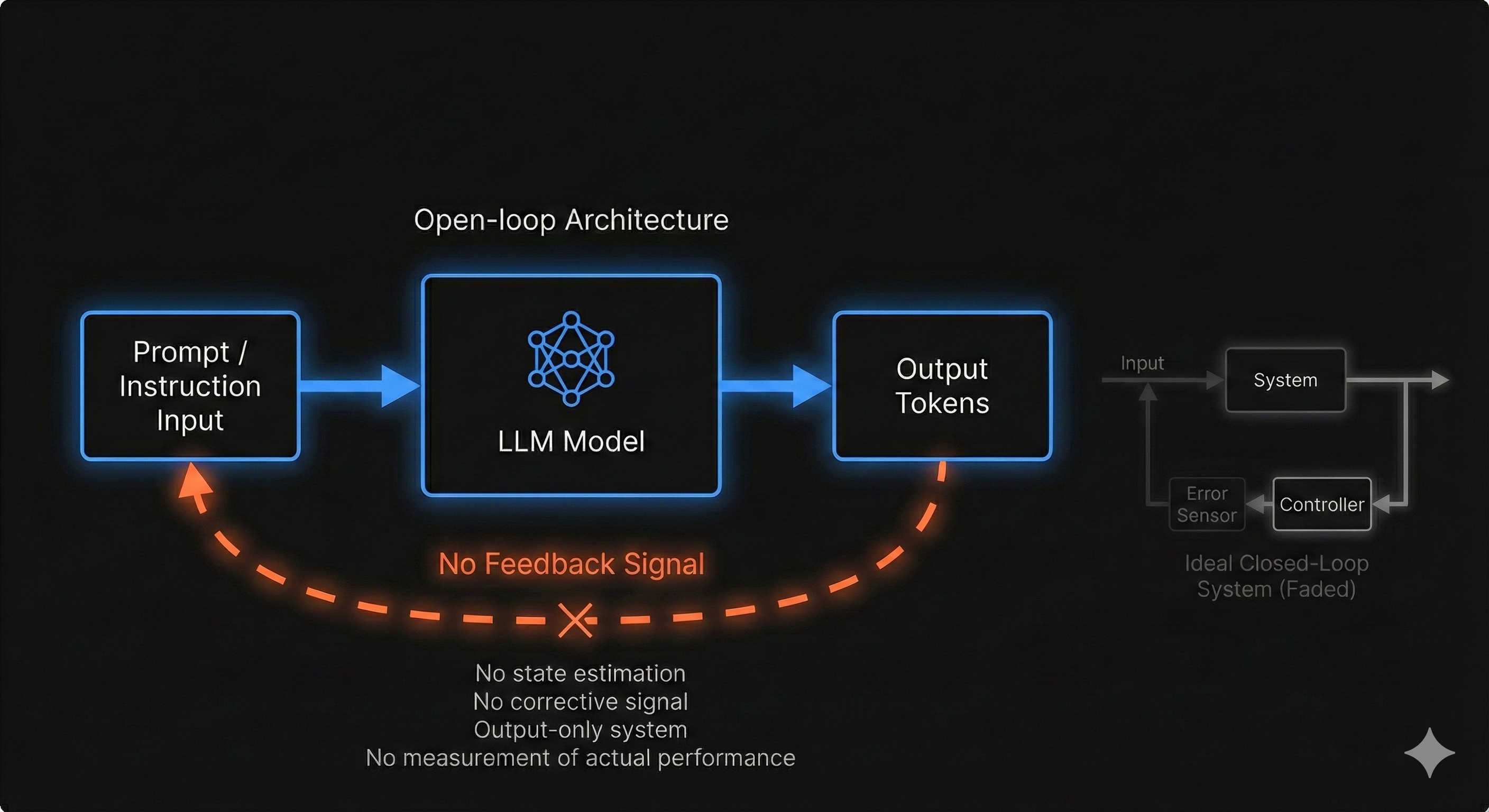

After working in aerospace systems—domains where instability is physically catastrophic—I see the same architectural gap repeating in modern AI: most LLM pipelines operate as open-loop controllers, systems that cannot stabilize themselves because they do not measure or correct their own behavior.

This is not a philosophical critique; it is a structural one.

The field has made meaningful progress with evaluations, RAG, multi-step reasoning, tool use, and agent frameworks. But these all improve the initial feed-forward signal, not the system's behavior during execution.

This is exactly the gap classical engineering solved decades ago.

Open-Loop Behavior: The Fundamental Source of Instability

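In aerospace and robotics, an open-loop controller sends a command without checking whether reality matched its assumption.

The result is instability: an inherently unstable system, such as a rocket balancing on its thrust, diverges quickly, and even a stable one lets every disturbance and modeling error accumulate uncorrected.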

LLM systems typically follow the same pattern:

- Construct a prompt

- Retrieve some documents

- Generate a plan

- Execute blindly

- Assume it worked

Reality rarely matches the plan. With no measurement and no correction, the system drifts or collapses silently.
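A toy numeric sketch makes the drift concrete. It assumes a one-dimensional "plant" that should move from 0 to a target in a fixed number of steps. The plan is computed once, on the assumption that each command moves the state by exactly its own value, while the plant also adds a small constant disturbance that the planner never models. The plant, the functions `plant_step`, `make_plan`, and `run_open_loop`, and the disturbance value are all illustrative assumptions, not a model of any particular LLM system.

```python
def plant_step(x: float, u: float, disturbance: float) -> float:
    """Toy plant: the command u moves the state, plus an unmodeled bias."""
    return x + u + disturbance


def make_plan(x0: float, target: float, steps: int) -> list[float]:
    """Feed-forward plan computed once, assuming the plant has no disturbance."""
    return [(target - x0) / steps] * steps


def run_open_loop(x0: float, target: float, steps: int, disturbance: float) -> float:
    """Execute the plan blindly: the state is never measured along the way."""
    x = x0
    for u in make_plan(x0, target, steps):
        x = plant_step(x, u, disturbance)
    return x


for steps in (10, 100, 1000):
    final = run_open_loop(x0=0.0, target=10.0, steps=steps, disturbance=0.05)
    print(f"{steps:>5} steps: final error = {final - 10.0:.2f}")
```

Under these assumptions the final error is `steps * disturbance`: it grows linearly with the length of the plan, and nothing inside the loop can notice it.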

A controller without feedback cannot stabilize itself.

Neither can an LLM agent.

Closed-Loop Control: The Layer AI Is Missing

Every reliable autonomous engineered system depends on closed-loop control:

- Observe the actual state

- Compare expected vs actual

- Compute the error

- Correct the next action

- Repeat continuously

That loop is what keeps rockets stable, drones hovering, and robots upright.
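The loop above can be sketched on a toy plant: a one-dimensional state with an unmodeled constant disturbance. Each iteration observes the actual state, compares it with the target, and sizes the next command from that error using a simple proportional law, `u = kp * error`. The plant, the gain `kp = 0.5`, and the disturbance value are illustrative assumptions, not a recommended design.

```python
def plant_step(x: float, u: float, disturbance: float) -> float:
    """Toy plant: the command u moves the state, plus an unmodeled bias."""
    return x + u + disturbance


def run_closed_loop(x0: float, target: float, steps: int,
                    disturbance: float, kp: float = 0.5) -> float:
    """Observe, compare, compute the error, correct, repeat."""
    x = x0
    for _ in range(steps):
        error = target - x   # compare expected vs actual (observed) state
        u = kp * error       # correct the next action from the error
        x = plant_step(x, u, disturbance)
    return x


for steps in (10, 100, 1000):
    final = run_closed_loop(x0=0.0, target=10.0, steps=steps, disturbance=0.05)
    print(f"{steps:>5} steps: final error = {final - 10.0:.2f}")
```

Under these assumptions the error settles at `disturbance / kp` (0.1 here) however long the run is, instead of growing with every step. The small residual offset is a known property of pure proportional control; adding integral action removes it.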

LLM systems need the same fundamental architecture.

Closed-loop LLM systems continuously:

- extract sensory state (DOM, screenshot, logs, metadata)

- detect divergence

- adjust trajectory

- re-plan locally

- stabilize behavior dynamically
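A minimal sketch of one closed-loop step for an agent, assuming a toy environment instead of a real browser. The planner attaches a predicted state to each action; after acting, the loop extracts the observed state, computes the divergence, and applies a local correction. `ToyPage`, `Step`, `divergence`, and `run_step` are hypothetical names, and the correction here is a plain retry; a real system might re-plan the step instead.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One planned action plus the state the planner predicts it will produce."""
    action: str
    expected: dict


@dataclass
class ToyPage:
    """Hypothetical environment standing in for a browser page."""
    state: dict = field(default_factory=lambda: {"url": "/login", "logged_in": False})
    fail_next_submit: bool = True  # the first submit silently does nothing

    def act(self, action: str) -> None:
        if action == "submit_login":
            if self.fail_next_submit:
                self.fail_next_submit = False  # silent failure: no error raised
            else:
                self.state.update(url="/home", logged_in=True)

    def observe(self) -> dict:
        """Extract sensory state (stand-in for DOM, screenshot, or logs)."""
        return dict(self.state)


def divergence(expected: dict, observed: dict) -> dict:
    """Return {key: (expected, observed)} for every predicted key that differs."""
    return {k: (v, observed.get(k)) for k, v in expected.items() if observed.get(k) != v}


def run_step(env: ToyPage, step: Step, max_attempts: int = 3) -> bool:
    """Act, observe, compare; apply a local correction (here: a plain retry)."""
    for attempt in range(1, max_attempts + 1):
        env.act(step.action)
        diff = divergence(step.expected, env.observe())
        if not diff:
            return True  # observed state matches the prediction
        print(f"attempt {attempt}: divergence {diff}")
    return False  # report failure instead of assuming success


page = ToyPage()
ok = run_step(page, Step("submit_login", {"url": "/home", "logged_in": True}))
print(ok, page.observe())
```

The first `submit_login` fails silently, which an open-loop pipeline would never notice. Here the divergence is measured and the step is retried until the observed state matches the prediction or the attempt budget runs out.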

This is not prompting.

This is not RAG.

This is control architecture.

Why "Almost Closed-Loop" Is Still Open-Loop

A subtle but important point:

Closed-loop is not a vibe.

It is a mechanism.

Many LLM systems today appear closed-loop because they incorporate humans or heuristics in the process:

- humans reviewing eval results

- engineers selecting a better prompt

- researchers swapping techniques

- ops teams adjusting settings after failures

- agents looping until "confidence is high"

This is not closed-loop control.

It is human-mediated or heuristic iteration, which is fundamentally:

- slow

- inconsistent

- non-deterministic

- not self-correcting

- not automated

- not guaranteed to converge

From a control perspective:

If the system cannot sense its own error and autonomously correct it, the system is still open-loop.

Real closed-loop systems correct themselves during execution, not after human review.

Human patching is supervision.

Closed-loop is autonomy.

It is the difference between:

- tuning a rocket's PID controller on Monday, and

- the rocket dynamically adjusting thrust every millisecond during landing.

One is design-time optimization.

The other is real-time stability.

LLM systems need the latter.
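The Monday-versus-landing distinction maps directly onto a textbook discrete PID controller: the gains `kp`, `ki`, and `kd` are fixed when the controller is constructed (design time), while `update()` runs every tick on a fresh measurement (run time). The toy plant, gains, and disturbance below are illustrative assumptions; production flight controllers typically also add anti-windup, derivative filtering, and actuator limits, all omitted here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Discrete PID controller; gains are chosen once, at design time."""
    kp: float
    ki: float
    kd: float
    dt: float = 1.0
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float) -> float:
        """Run time: called every tick with a fresh measurement."""
        error = setpoint - measured
        self.integral += error * self.dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(controller: PID, target: float, steps: int, disturbance: float) -> float:
    """Toy plant: x[k+1] = x[k] + u[k] + disturbance (the bias is unmodeled)."""
    x = 0.0
    for _ in range(steps):
        u = controller.update(target, x)  # measure, compare, correct
        x = x + u + disturbance
    return x


p_only = simulate(PID(kp=0.5, ki=0.0, kd=0.0), target=10.0, steps=200, disturbance=0.05)
pi = simulate(PID(kp=0.5, ki=0.1, kd=0.0), target=10.0, steps=200, disturbance=0.05)
print(f"P only: |error| = {abs(p_only - 10.0):.3f}")
print(f"PI:     |error| = {abs(pi - 10.0):.3f}")
```

With proportional action alone, the constant disturbance leaves a residual offset. The integral term accumulates that error at run time and cancels the bias, even though no gain was changed after construction.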

Modern LLM Techniques Improve the Command, Not the Control Loop

Let's evaluate the core techniques used today.

1. Evaluations (Including Prompt Evals)

Teams use evals to refine prompts, rank chain-of-thought patterns, and iterate designs.

That is useful engineering work.

But evals are entirely offline:

- they run after the system performs

- humans interpret the results

- humans decide what to change

- the model does not self-correct during execution

In control-theoretic terms, this is improving controller parameters before takeoff, not actually measuring errors during flight.

Evals reduce design-time error; they do not introduce runtime stability.

2. RAG Pipelines

RAG gives the model a better initial world model.

But a better map is not a sensor.

RAG ≠ observation.

RAG ≠ validation.

RAG ≠ correction.

It is still open-loop.

3. Tool Calling

Tools extend capability, but most implementations follow the same pattern:

LLM → Tool → Response → Next Step

with no machinery for:

- checking correctness

- modeling error

- adjusting parameters

- rejecting invalid states

These are actuators without sensors.
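A minimal sketch of adding a sensor to a tool call: each response is checked against a postcondition, and a response that fails is retried and ultimately rejected rather than passed to the next step. It covers only two of the four gaps listed above (checking correctness and rejecting invalid states). `checked_call`, `InvalidToolResult`, the retry count, and the `get_balance` stub are hypothetical, not part of any agent framework's API.

```python
from typing import Any, Callable


class InvalidToolResult(Exception):
    """Raised when a tool's response never satisfies its postcondition."""


def checked_call(tool: Callable[..., Any],
                 postcondition: Callable[[Any], bool],
                 *args: Any,
                 retries: int = 2,
                 **kwargs: Any) -> Any:
    """Call a tool, check the response, and reject invalid states."""
    result = None
    for _ in range(retries + 1):
        result = tool(*args, **kwargs)
        if postcondition(result):  # sensor: verify before moving on
            return result
    raise InvalidToolResult(f"{getattr(tool, '__name__', 'tool')} returned {result!r}")


# Hypothetical tool stub whose first response is corrupt.
_responses = iter([{"balance": -1}, {"balance": 120}])


def get_balance(account_id: str) -> dict:
    return next(_responses)


balance = checked_call(get_balance, lambda r: r.get("balance", -1) >= 0, "acct-42")
print(balance)
```

The invalid first response never reaches the next step; if every attempt failed, the caller would get an explicit exception instead of a silently corrupted state.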

4. Chain-of-Thought and Planning

Planning extends the feed-forward chain but doesn't stabilize it.

A longer open-loop chain is still open-loop.

No autonomous vehicle, aircraft, or robot navigates this way.
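A back-of-the-envelope calculation shows why length alone does not help. It assumes each step succeeds independently with probability `p`, so an open-loop chain of `n` steps succeeds with probability `p ** n`. The closed-loop variant additionally assumes a verifier that catches every failed step and allows up to `attempts` independent retries. Those assumptions are generous to the closed loop and purely illustrative; the function names are hypothetical.

```python
def open_loop_success(p_step: float, n_steps: int) -> float:
    """The chain succeeds only if every step succeeds; errors are never caught."""
    return p_step ** n_steps


def closed_loop_success(p_step: float, n_steps: int, attempts: int) -> float:
    """Each step is verified and retried up to `attempts` times (perfect verifier assumed)."""
    p_fixed = 1 - (1 - p_step) ** attempts
    return p_fixed ** n_steps


for n in (5, 20, 50):
    print(f"{n:>2} steps: open-loop {open_loop_success(0.95, n):.3f}, "
          f"closed-loop (3 attempts) {closed_loop_success(0.95, n, 3):.3f}")
```

With `p = 0.95`, a 20-step open-loop chain succeeds only about 36% of the time, while the verified chain stays above 99% under the stated assumptions.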

5. Agent Frameworks

Most frameworks orchestrate multiple actions, but they lack:

- explicit expected → actual state comparison

- error signals

- dynamic correction policies

- state stabilization mechanisms

- convergence criteria

They coordinate.

They do not control.

True Closed-Loop Architectures Must Be Engineered

Closed-loop is not something that emerges from prompting tricks.

It must be architected into the system.

A closed-loop LLM agent must:

- Measure the real-world state

- Detect deviation from the predicted state

- Identify the error mode

- Apply corrective action automatically

- Continue until stable

Only then does the system behave like a real autonomous process instead of a scripted oracle.

This is the same architecture used in:

- flight control systems

- self-balancing robots

- precision landing

- industrial robotics

- autonomous vehicles

AI is no exception.

Intelligence without feedback is just open-loop reasoning.
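The five requirements for a closed-loop agent listed earlier in this section can be sketched as a small loop, assuming the agent can observe its state as a dictionary and has one corrective action per error mode. `ErrorMode`, `classify`, and `control_loop` are hypothetical names, and the iteration cap stands in for a convergence criterion so that the loop reports failure instead of running forever.

```python
from enum import Enum, auto
from typing import Callable


class ErrorMode(Enum):
    NONE = auto()
    MISSING_FIELD = auto()
    WRONG_VALUE = auto()


def classify(expected: dict, observed: dict) -> ErrorMode:
    """Detect deviation from the predicted state and identify its mode."""
    if any(key not in observed for key in expected):
        return ErrorMode.MISSING_FIELD
    if any(observed[key] != value for key, value in expected.items()):
        return ErrorMode.WRONG_VALUE
    return ErrorMode.NONE


def control_loop(observe: Callable[[], dict],
                 corrections: dict[ErrorMode, Callable[[], object]],
                 expected: dict,
                 max_iters: int = 5) -> bool:
    """Measure, compare, correct by error mode, and repeat until stable."""
    for _ in range(max_iters):
        mode = classify(expected, observe())  # measure + detect + identify
        if mode is ErrorMode.NONE:
            return True                        # stable: convergence reached
        corrections[mode]()                    # apply the mode-specific correction
    return False                               # not converged: escalate


# Hypothetical example: an agent filling in a form field.
form: dict = {}
policy = {
    ErrorMode.MISSING_FIELD: lambda: form.setdefault("email", ""),
    ErrorMode.WRONG_VALUE: lambda: form.update(email="user@example.com"),
}
ok = control_loop(lambda: dict(form), policy, expected={"email": "user@example.com"})
print(ok, form)
```

The form starts empty, so the first pass detects a missing field and creates it, the second detects a wrong value and fixes it, and the third observes a matching state and stops.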

Conclusion: Stability Is Not Optional

LLMs generate intelligent actions.

Closed-loop control stabilizes those actions.

The field is advancing rapidly, but it is advancing primarily in feed-forward directions — better prompts, better retrieval, better plans, better models.

The next layer is not more reasoning.

It is the architectural machinery that every autonomous engineered system relies on:

feedback, error correction, state tracking, and closed-loop stabilization.

Without that layer, LLM systems will always behave like a self-balancing robot with its sensors unplugged — impressive, powerful, but fundamentally unstable in the real world.

Closed-loop is the line between fragility and autonomy.

And the field is about to cross it.